REVIEW of FINANCE - Apr. 2018


Binary Logistic Regression Analysis

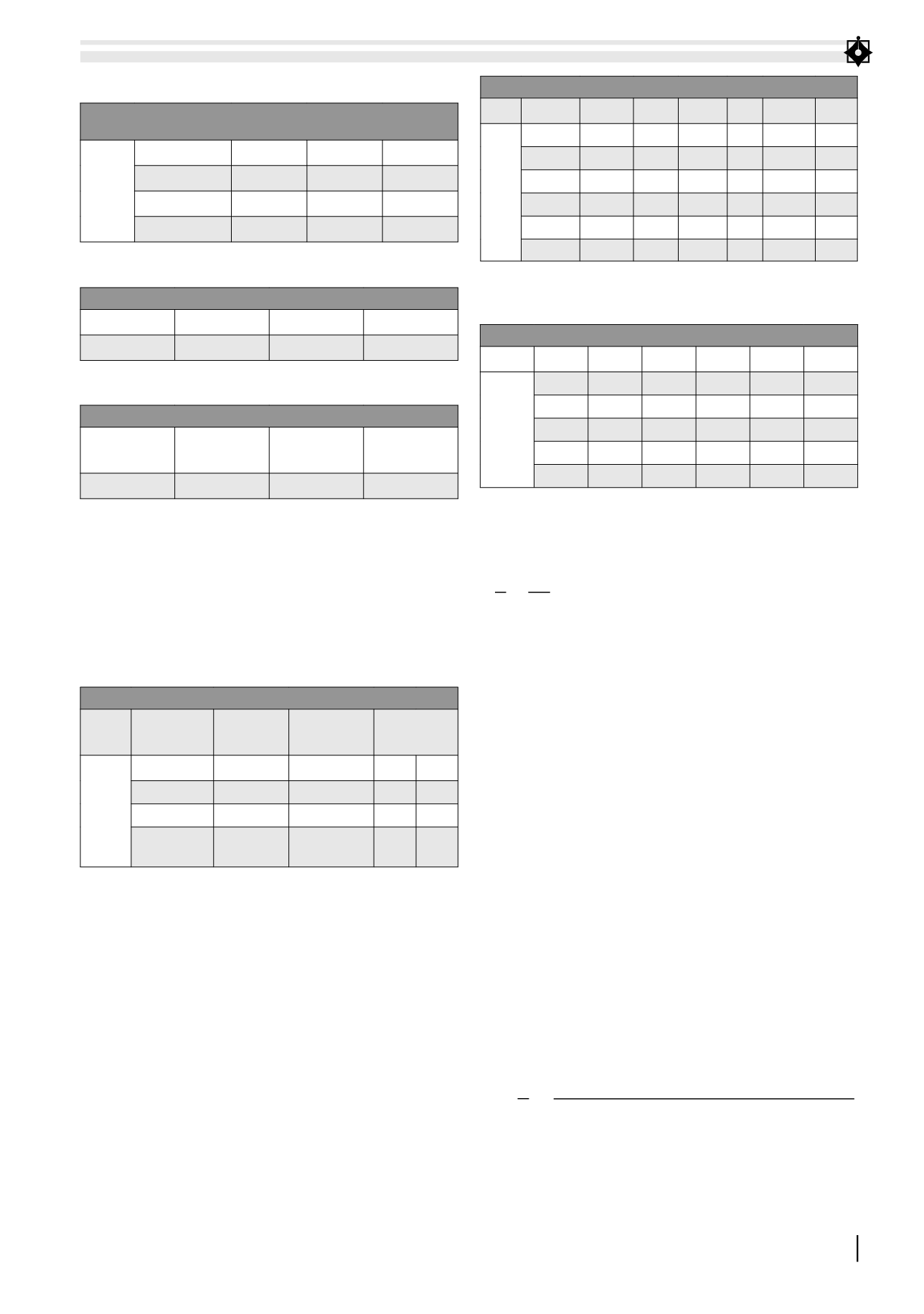

TABLE 3. RELIABILITY TEST FOR BINARY LOGISTIC REGRESSION

OMNIBUS TESTS OF MODEL COEFFICIENTS

| Step 1 | Chi-square | df | Sig. |
|---|---|---|---|
| Step | 71.072 | 8 | .000 |
| Block | 71.072 | 8 | .000 |
| Model | 71.072 | 8 | .000 |

HOSMER AND LEMESHOW TEST

| Step | Chi-square | df | Sig. |
|---|---|---|---|
| 1 | 11.154 | 8 | .193 |

MODEL SUMMARY

| Step | -2 Log likelihood | Cox & Snell R Square | Nagelkerke R Square |
|---|---|---|---|
| 1 | 281.382ᵃ | .470 | .627 |

a. Estimation terminated at iteration number 6 because parameter estimates changed by less than .001.

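Table 3 shows that the Omnibus Test of Model Coefficients is significant (Sig. < 0.05), so the predictors jointly improve on a model containing only the constant, and that the Hosmer and Lemeshow Test is not significant (Sig. = .193 > 0.05), so there is no evidence of lack of fit. Together with the -2 Log likelihood of 281.382, these results indicate that the model fits the data.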
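The Cox & Snell and Nagelkerke values in the Model Summary can be recomputed from the -2 Log likelihood once the intercept-only baseline is known. A minimal sketch, assuming that baseline depends only on the class counts taken from Table 4 (199 “No”, 177 “Yes”); the function name `pseudo_r2` is hypothetical.

```python
import math


def pseudo_r2(neg2ll_model: float, n_no: int, n_yes: int) -> tuple[float, float]:
    """Cox & Snell and Nagelkerke R^2 from the fitted model's -2 log-likelihood.

    Assumption: the baseline is an intercept-only model, whose log-likelihood
    depends only on the number of "No" and "Yes" cases.
    """
    n = n_no + n_yes
    # Log-likelihood of the intercept-only model (predicts the sample proportion).
    ll_null = n_no * math.log(n_no / n) + n_yes * math.log(n_yes / n)
    neg2ll_null = -2.0 * ll_null
    # Likelihood-ratio statistic: improvement of the fitted model over the baseline.
    lr_stat = neg2ll_null - neg2ll_model
    cox_snell = 1.0 - math.exp(-lr_stat / n)
    # Largest attainable Cox & Snell value, used to rescale it to a 0..1 range.
    max_cox_snell = 1.0 - math.exp(-neg2ll_null / n)
    nagelkerke = cox_snell / max_cox_snell
    return cox_snell, nagelkerke


cs, nk = pseudo_r2(281.382, n_no=199, n_yes=177)
print(f"Cox & Snell R^2 = {cs:.3f}, Nagelkerke R^2 = {nk:.3f}")
```

With these inputs the sketch returns 0.470 and 0.627, in line with the reported values; Nagelkerke's measure simply rescales Cox & Snell so that its maximum is 1.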

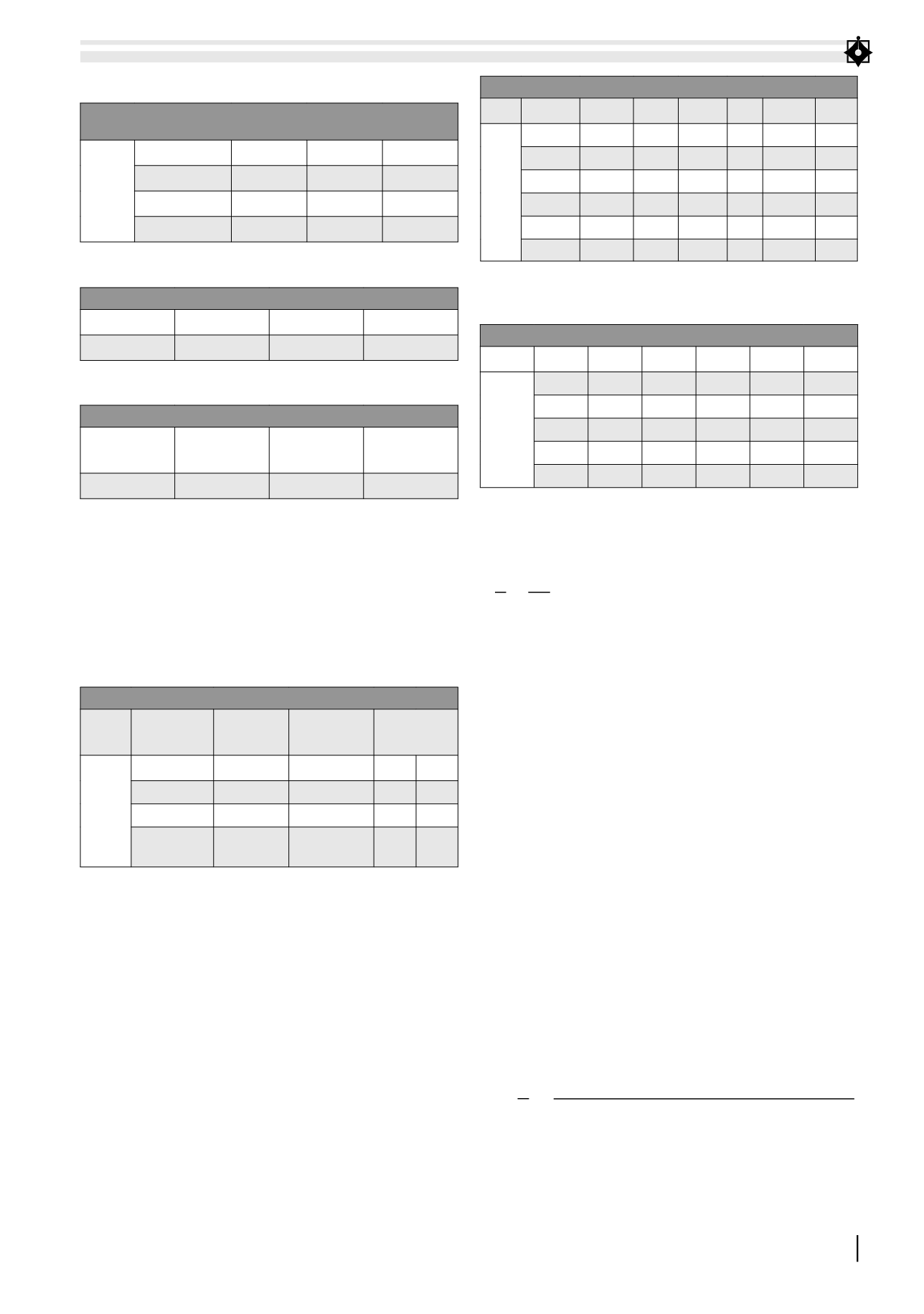

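TABLE 4. CLASSIFICATION TABLEᵃ

| Step 1 | Observed | Predicted Decision: No | Predicted Decision: Yes | Percentage Correct |
|---|---|---|---|---|
| Decision | No | 165 | 34 | 82.9 |
| Decision | Yes | 28 | 149 | 84.2 |
| Overall Percentage | | | | 83.5 |

a. The cut value is .500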

Source: Authors

Table 4 shows that 165 of the 199 observed “No” cases are correctly predicted as “No” (approximately 82.9%), and 149 of the 177 observed “Yes” cases are correctly predicted as “Yes” (approximately 84.2%). The overall percentage of cases correctly classified by the model is therefore approximately 83.5% ((165 + 149)/376).
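The percentages in Table 4 are plain ratios of the cell counts. A short sketch; the function and parameter names are illustrative, not from the source.

```python
def classification_rates(correct_no: int, wrong_no: int,
                         wrong_yes: int, correct_yes: int) -> dict[str, float]:
    """Percentage correct per observed class and overall.

    correct_no:  observed "No",  predicted "No"
    wrong_no:    observed "No",  predicted "Yes"
    wrong_yes:   observed "Yes", predicted "No"
    correct_yes: observed "Yes", predicted "Yes"
    """
    total = correct_no + wrong_no + wrong_yes + correct_yes
    return {
        "No": 100.0 * correct_no / (correct_no + wrong_no),
        "Yes": 100.0 * correct_yes / (wrong_yes + correct_yes),
        "Overall": 100.0 * (correct_no + correct_yes) / total,
    }


# Cell counts from Table 4 (cut value .500).
rates = classification_rates(165, 34, 28, 149)
for label, pct in rates.items():
    print(f"{label}: {pct:.1f}%")
```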

As Table 5 shows, all five predictors (PC, JC, UC, FP and CD) contribute significantly to the model (Sig. < 0.05).

There should also be no high intercorrelations (multicollinearity) among the predictors. This can be assessed with the correlation matrix reported with Table 5: all correlation coefficients among the independent variables are below 0.8 (the largest is .279, between PC and CD), so multicollinearity is not a concern.
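The check described above amounts to scanning the off-diagonal entries for any |r| of 0.8 or more. A minimal sketch, with values copied from the correlation matrix reported with Table 5; the 0.8 cut-off is the text's rule of thumb, and the helper name `max_offdiag` is hypothetical.

```python
import itertools

# Correlation values as reported in the correlation matrix for Table 5.
names = ["PC", "JC", "UC", "FP", "CD"]
corr = [
    [1.000, -0.054, -0.113, 0.074, 0.279],
    [-0.054, 1.000, 0.028, -0.117, 0.043],
    [-0.113, 0.028, 1.000, -0.063, 0.153],
    [0.074, -0.117, -0.063, 1.000, 0.126],
    [0.279, 0.043, 0.153, 0.126, 1.000],
]
THRESHOLD = 0.8  # rule-of-thumb cut-off used in the text


def max_offdiag(matrix: list[list[float]], labels: list[str]) -> tuple[float, tuple[str, str]]:
    """Return (|r|, (label_i, label_j)) for the largest absolute off-diagonal entry."""
    return max(
        (abs(matrix[i][j]), (labels[i], labels[j]))
        for i, j in itertools.combinations(range(len(labels)), 2)
    )


largest, pair = max_offdiag(corr, names)
print(f"largest |r| = {largest:.3f} between {pair[0]} and {pair[1]}")
print("multicollinearity flagged" if largest >= THRESHOLD else "all |r| below 0.8")
```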

TABLE 5. VARIABLES IN THE EQUATION

| Step 1ᵃ | B | S.E. | Wald | df | Sig. | Exp(B) |
|---|---|---|---|---|---|---|
| PC | 1.270 | .196 | 41.847 | 1 | .000 | 3.561 |
| JC | .455 | .172 | 7.030 | 1 | .008 | 1.576 |
| UC | .708 | .241 | 8.631 | 1 | .003 | 2.029 |
| FP | 1.243 | .276 | 20.275 | 1 | .000 | 3.465 |
| CD | 1.944 | .302 | 41.444 | 1 | .000 | 6.984 |
| Constant | -19.952 | 2.102 | 90.057 | 1 | .000 | .000 |

a. Variable(s) entered on step 1: PC, JC, UC, FP, CD

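CORRELATION MATRIX

| Step 1 | PC | JC | UC | FP | CD |
|---|---|---|---|---|---|
| PC | 1.000 | -.054 | -.113 | .074 | .279 |
| JC | -.054 | 1.000 | .028 | -.117 | .043 |
| UC | -.113 | .028 | 1.000 | -.063 | .153 |
| FP | .074 | -.117 | -.063 | 1.000 | .126 |
| CD | .279 | .043 | .153 | .126 | 1.000 |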

Source: Authors
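Two columns of Table 5 follow directly from B and S.E.: the Wald statistic is (B / S.E.)² with 1 df, and Exp(B) is the odds ratio e^B. A minimal sketch using the printed coefficients; the helper name `wald_and_odds_ratio` is hypothetical.

```python
import math

# (B, S.E.) pairs as reported in Table 5.
coefficients = {
    "PC": (1.270, 0.196),
    "JC": (0.455, 0.172),
    "UC": (0.708, 0.241),
    "FP": (1.243, 0.276),
    "CD": (1.944, 0.302),
    "Constant": (-19.952, 2.102),
}


def wald_and_odds_ratio(b: float, se: float) -> tuple[float, float]:
    """Wald statistic (B / S.E.)^2, tested on 1 df, and the odds ratio Exp(B)."""
    return (b / se) ** 2, math.exp(b)


for name, (b, se) in coefficients.items():
    wald, odds_ratio = wald_and_odds_ratio(b, se)
    print(f"{name:9s} Wald = {wald:7.3f}  Exp(B) = {odds_ratio:.3f}")
```

Small gaps against the table (for example 41.985 versus 41.847 for PC) are expected, because B and S.E. are printed rounded to three decimals.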

Whereas linear regression predicts the value that Y takes, binary logistic regression models the odds that Y occurs:

$$\frac{P}{1-P} = e^{(-19.952 + 1.27PC + 0.455JC + 0.708UC + 1.243FP + 1.944CD)}$$

P = P(Y | Xi): probability of Y occurring

Holding the other variables constant, each 1-unit increase in Personal Characteristics (PC) raises the log-odds of the Decision to choose Accounting as a major by 1.270, which multiplies the odds of that Decision by Exp(B) = 3.561. In the same way, a 1-unit increase in Job Characteristics (JC) raises the log-odds by 0.455 (odds multiplied by 1.576); a 1-unit increase in University Characteristics (UC) raises them by 0.708 (odds multiplied by 2.029); a 1-unit increase in Family members and Peers (FP) raises them by 1.243 (odds multiplied by 3.465); and, finally, a 1-unit increase in Communication and Labour demand (CD) raises them by 1.944 (odds multiplied by 6.984). Because these effects act on the odds, the resulting change in the probability itself depends on the starting values of all predictors.
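Because B is on the log-odds scale, a 1-unit change multiplies the odds, not the probability, by Exp(B). The sketch below uses the Table 5 coefficients and a hypothetical respondent scoring 3 on every scale (illustrative values, not taken from the study) to show the difference; all function names are hypothetical.

```python
import math

# Coefficients (B) from Table 5.
INTERCEPT = -19.952
B = {"PC": 1.270, "JC": 0.455, "UC": 0.708, "FP": 1.243, "CD": 1.944}


def probability(x: dict[str, float]) -> float:
    """P(Y | X) from the logistic model, written as 1 / (1 + e^-z)."""
    z = INTERCEPT + sum(B[k] * x[k] for k in B)
    return 1.0 / (1.0 + math.exp(-z))


def odds(p: float) -> float:
    return p / (1.0 - p)


# Hypothetical respondent; raise PC by 1 unit and hold the rest fixed.
base = {"PC": 3.0, "JC": 3.0, "UC": 3.0, "FP": 3.0, "CD": 3.0}
bumped = dict(base, PC=base["PC"] + 1.0)

ratio = odds(probability(bumped)) / odds(probability(base))
print(f"odds ratio = {ratio:.3f}")  # equals Exp(B) for PC
print(f"probability: {probability(base):.3f} -> {probability(bumped):.3f}")
```

The odds ratio equals e^1.270 at any starting point, while the ratio of the two probabilities (about 3.2 here) changes with the chosen profile.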

$$P(Y \mid X_i) = \frac{e^{(-19.952 + 1.27PC + 0.455JC + 0.708UC + 1.243FP + 1.944CD)}}{1 + e^{(-19.952 + 1.27PC + 0.455JC + 0.708UC + 1.243FP + 1.944CD)}}$$

Xi: the values of the predictors for a given case; P(Y | Xi) is the predicted probability of Y at Xi
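The probability equation can be evaluated directly. A minimal sketch, using the Table 5 coefficients and the average profile the text uses next (PC = 3.7, JC = 3.8, UC = 3.4, FP = 4.0, CD = 4.0); it also checks that the result is consistent with the odds form P/(1 − P) = e^z. The function names are hypothetical.

```python
import math

# Coefficients from Table 5.
INTERCEPT = -19.952
B = {"PC": 1.270, "JC": 0.455, "UC": 0.708, "FP": 1.243, "CD": 1.944}


def linear_predictor(x: dict[str, float]) -> float:
    """z = -19.952 + 1.27PC + 0.455JC + 0.708UC + 1.243FP + 1.944CD."""
    return INTERCEPT + sum(B[name] * x[name] for name in B)


def predicted_probability(x: dict[str, float]) -> float:
    """P(Y | Xi) = e^z / (1 + e^z)."""
    ez = math.exp(linear_predictor(x))
    return ez / (1.0 + ez)


average = {"PC": 3.7, "JC": 3.8, "UC": 3.4, "FP": 4.0, "CD": 4.0}
z = linear_predictor(average)
p = predicted_probability(average)
print(f"z = {z:.4f}, P = {p:.3f}")
# The odds implied by P should equal e^z.
print(math.isclose(p / (1.0 - p), math.exp(z)))
```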

Assume that a person has the average values PC = 3.7, JC = 3.8, UC = 3.4, FP = 4.0 and CD = 4.0; the probability of Y is then: